Tracing the sky

INTERACTIVE PROJECTION MAPPING

- Project

- Installation

Arts

- Technologies

- Augmented Reality

ARCore

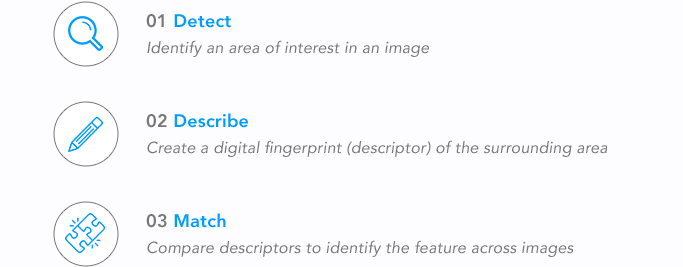

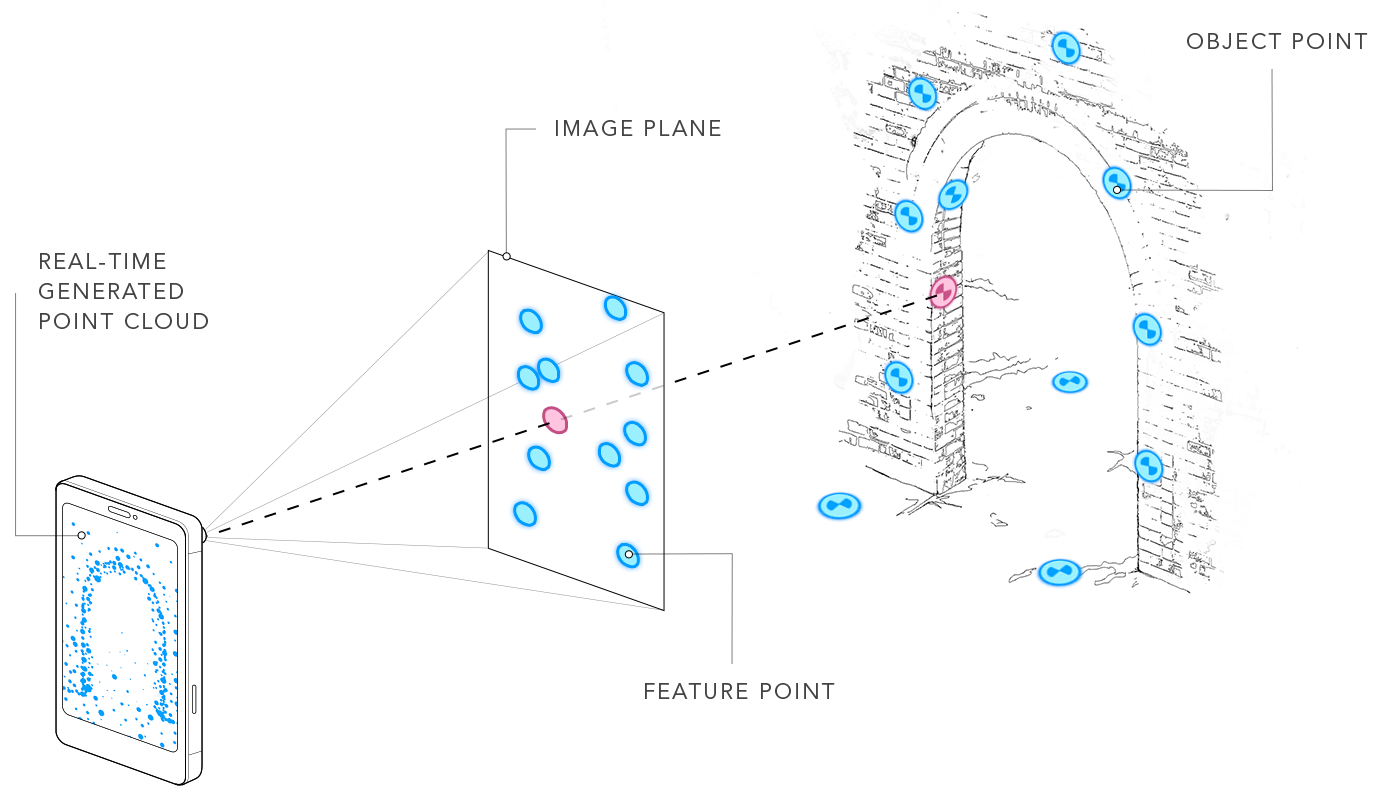

Computer Vision

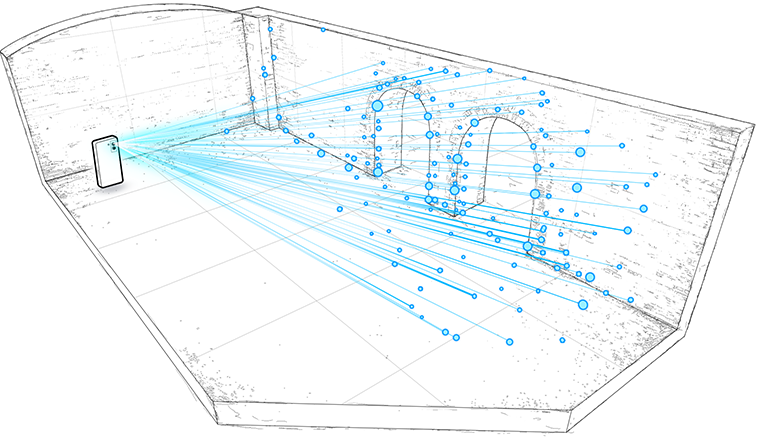

Visual Odometry

Visual SLAM

Networking

Unity

An underground hideout was transformed into a mesmerising, immersive and interactive space, using AR to influence a projection mapping installation.

Phones were tracked as they moved around the space, enabling visitors to interact with fluid visuals projected onto the room. Moving a phone through the air or swiping its screen repelled particles projected within view, influencing how they flowed across physical surfaces while the user’s gestures were drawn over the room.
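The repelling interaction can be pictured with a small sketch. This is not the installation's code (which was presumably built in Unity); it is a minimal Python illustration that assumes the tracked phone position has already been mapped to a 2D point on a projection surface. The function name `repel` and the parameters `radius`, `strength` and `dt` are hypothetical and chosen only for illustration.

```python
import math


def repel(particles, repeller, radius=1.0, strength=0.5, dt=1.0 / 60.0):
    """Return new particle positions after one repulsion step.

    particles: list of (x, y) positions on the surface (assumed 2D).
    repeller:  (x, y) point where the phone gesture lands (assumed given).
    """
    rx, ry = repeller
    moved = []
    for x, y in particles:
        dx, dy = x - rx, y - ry
        dist = math.hypot(dx, dy)
        # Only particles inside the radius are affected; a particle sitting
        # exactly on the repeller has no defined direction and is left alone.
        if 0.0 < dist < radius:
            # Linear falloff: strongest push near the repeller, zero at the edge.
            push = strength * (1.0 - dist / radius) * dt
            x += dx / dist * push
            y += dy / dist * push
        moved.append((x, y))
    return moved


if __name__ == "__main__":
    # One particle inside the radius and one outside it.
    print(repel([(0.5, 0.0), (2.0, 0.0)], (0.0, 0.0), dt=1.0))
```

Calling `repel` once per frame with the latest gesture position would push nearby particles outward while leaving distant ones untouched. A real fluid simulation would add velocity, damping and surface constraints on top of this.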

The installation was part of Distractions, a tech summit presented by Manchester International Festival of arts.